The following options appear on the Bagging, Boosting, and Random Trees dialogs.

Ensemble Methods Classification dialog, Data tab

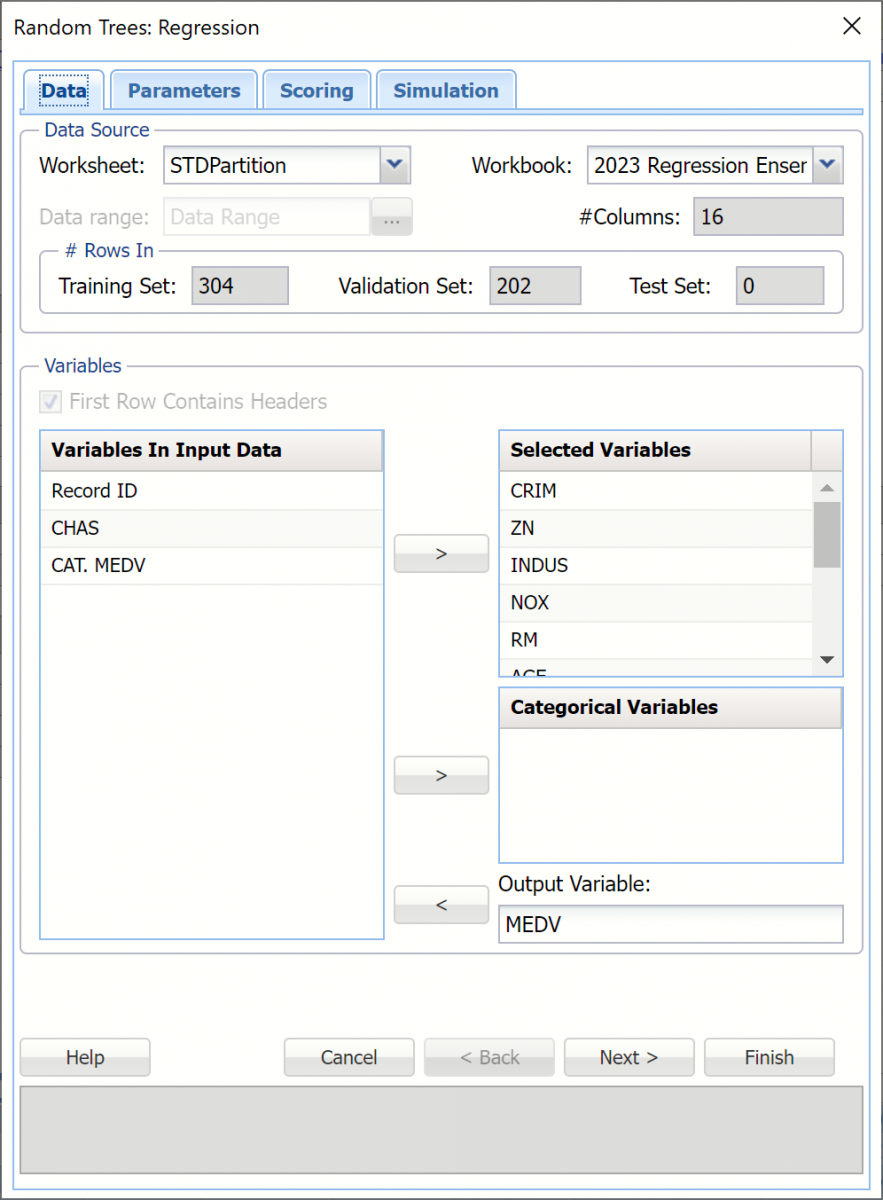

Please see below for options appearing on the Ensemble Methods - Data dialog. This dialog is the same for all three ensemble methods.

Variables In Input Data

The variables included in the dataset appear here.

Selected Variables

Add continuous variables to be included in the model here.

Categorical Variables

Add categorical variables to be included in the model here.

Output Variable

The dependent variable or the variable to be classified appears here.

Ensemble Methods Classification dialog, Boosting Parameters tab

Please see below for options appearing on the Boosting Parameters dialog.

Partition Data

Analytic Solver Data Science can partition a dataset from within a classification or prediction method: click Partition Data on the Parameters dialog, and Analytic Solver will partition your dataset (according to the partition options you set) immediately before running the method. If the dataset has already been partitioned, this option is disabled. For more information on partitioning, please see the Data Science Partitioning chapter.
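To illustrate what a random partition does, the following minimal sketch shuffles row indices with a fixed seed and splits them into training, validation and test sets. The function name `partition_rows` and the 60/20/20 fractions are hypothetical choices for this example, not Analytic Solver's defaults or its actual partitioning code.

```python
import random

def partition_rows(n_rows, train_frac=0.6, valid_frac=0.2, seed=12345):
    """Randomly assign row indices to training, validation and test sets.

    Hypothetical helper: the fractions and seed are illustrative values only.
    """
    if train_frac < 0 or valid_frac < 0 or train_frac + valid_frac > 1:
        raise ValueError("fractions must be non-negative and sum to at most 1")
    rng = random.Random(seed)            # fixed seed -> the same split every run
    indices = list(range(n_rows))
    rng.shuffle(indices)
    n_train = int(round(n_rows * train_frac))
    n_valid = int(round(n_rows * valid_frac))
    train = sorted(indices[:n_train])
    valid = sorted(indices[n_train:n_train + n_valid])
    test = sorted(indices[n_train + n_valid:])  # whatever rows remain
    return train, valid, test

train, valid, test = partition_rows(10)
print(len(train), len(valid), len(test))  # 6 2 2
```

Every row lands in exactly one partition, which is why a model scored on the validation or test rows has not seen them during training.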

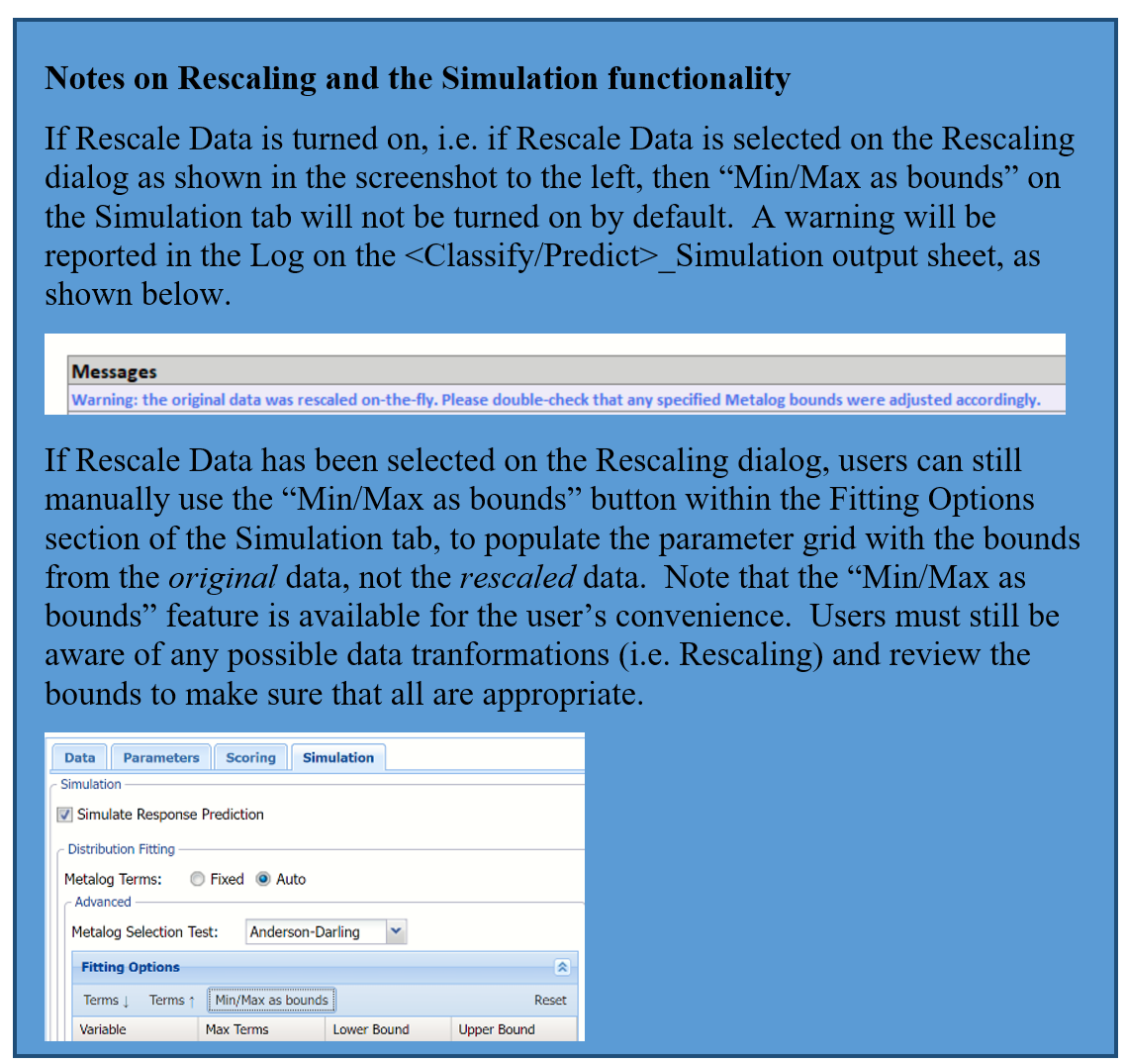

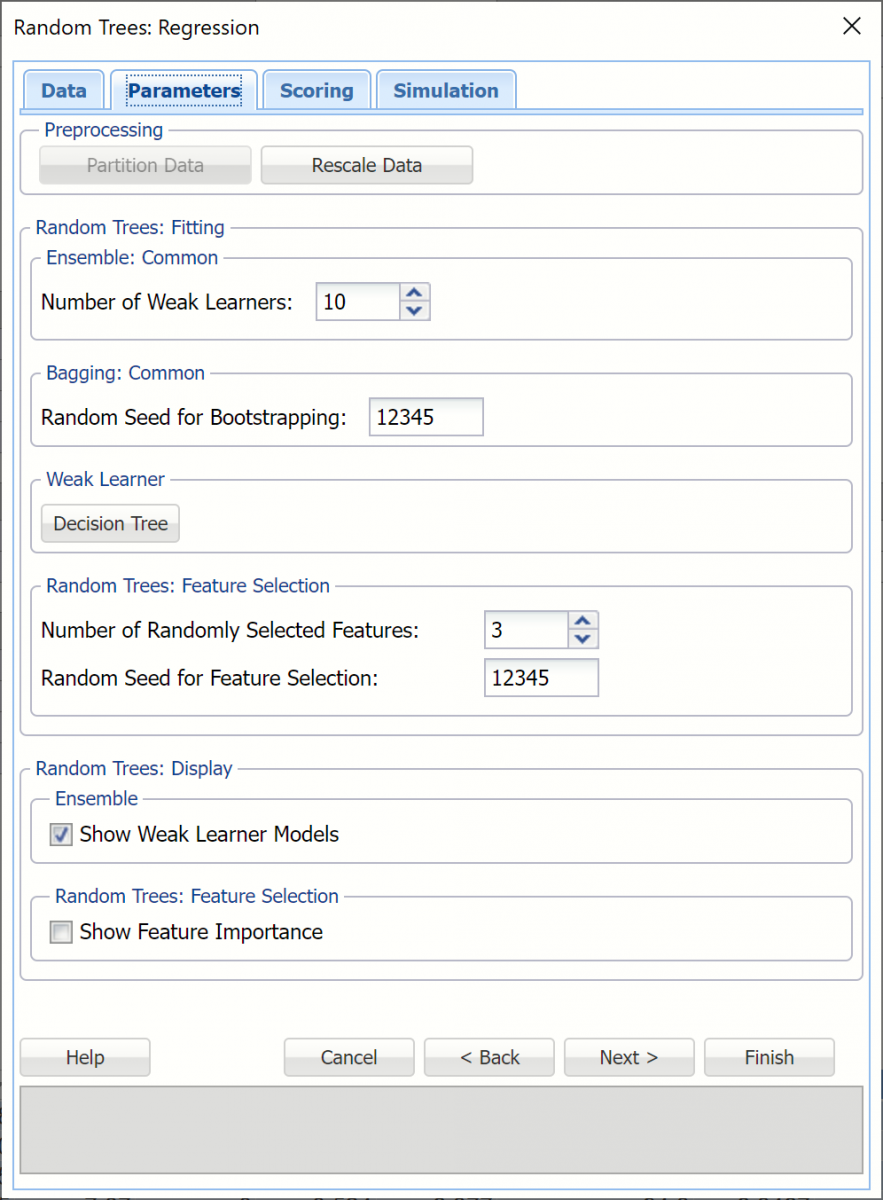

Rescale Data

Click Rescale Data to open the Rescaling dialog. Use Rescaling to normalize one or more features in your data during the data preprocessing stage. Analytic Solver Data Science provides the following methods for feature scaling: Standardization, Normalization, Adjusted Normalization and Unit Norm. For more information on this new feature, see the Rescale Continuous Data section within the Transform Continuous Data topic earlier in this guide.
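The four scaling methods can be sketched with their common textbook formulas, applied here to a single feature column. This is an illustration under assumptions: the sample standard deviation in Standardization, the [-1, 1] target range for Adjusted Normalization, and applying Unit Norm to the whole column are our choices, so check the Rescale Continuous Data section for Analytic Solver's exact definitions. The function `rescale` and its method strings are hypothetical.

```python
import math

def rescale(values, method):
    """Rescale one continuous feature (a list of floats) using textbook formulas."""
    n = len(values)
    if method == "standardization":
        mean = sum(values) / n
        # assumption: sample standard deviation (n - 1 in the denominator)
        std = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
        return [(v - mean) / std for v in values]
    lo, hi = min(values), max(values)
    if method == "normalization":           # maps the column onto [0, 1]
        return [(v - lo) / (hi - lo) for v in values]
    if method == "adjusted_normalization":  # assumption: maps onto [-1, 1]
        return [2 * (v - lo) / (hi - lo) - 1 for v in values]
    if method == "unit_norm":               # Euclidean length of the column becomes 1
        norm = math.sqrt(sum(v * v for v in values))
        return [v / norm for v in values]
    raise ValueError(f"unknown method: {method}")

x = [2.0, 4.0, 6.0]
print(rescale(x, "normalization"))           # [0.0, 0.5, 1.0]
print(rescale(x, "adjusted_normalization"))  # [-1.0, 0.0, 1.0]
```

A constant column (zero spread) would cause a division by zero in the first three methods, so such features are usually dropped before rescaling.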

Number of Weak Learners

This option controls the number of "weak" learner models that will be created. The ensemble method stops when the number of models created reaches the value set for this option.

Weak Learner

Under Ensemble: Regression, click the down arrow beneath Weak Learner to select one of the four featured classifiers: Linear Regression, k-NN, Neural Networks, or Decision Trees. After a weak learner is chosen, the command button to its right is enabled. Click this button to control the option settings for the weak learner.

Step Size

The AdaBoost algorithm minimizes a loss function using the gradient descent method. The Step size option keeps the algorithm from descending too far when it moves to the next step. It is recommended to leave this option at the default of 0.3, but any number between 0 and 1 is acceptable. A Step size closer to 0 makes the algorithm take smaller steps toward the next point, while a setting closer to 1 makes it take larger steps.
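AdaBoost can be read as stagewise minimization of an exponential loss, where each new weak learner is one step. The sketch below is textbook discrete AdaBoost for two classes coded -1/+1, with two assumptions that are ours rather than Analytic Solver's: one-split decision stumps stand in for the weak learner, and the Step size is treated as a shrinkage factor multiplying each learner's vote. The names `adaboost`, `n_learners` and `step_size` are hypothetical; the loop also shows the Number of Weak Learners setting acting as the stopping rule.

```python
import math

def fit_stump(X, y, w):
    """Return (weighted_error, feature, threshold, polarity) of the best one-split rule."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            for polarity in (1, -1):
                # the rule predicts +polarity when row[j] <= thr, else -polarity
                err = sum(wi for row, yi, wi in zip(X, y, w)
                          if (polarity if row[j] <= thr else -polarity) != yi)
                if best is None or err < best[0]:
                    best = (err, j, thr, polarity)
    return best

def stump_predict(stump, row):
    _, j, thr, polarity = stump
    return polarity if row[j] <= thr else -polarity

def adaboost(X, y, n_learners=10, step_size=0.3):
    """Discrete AdaBoost for labels in {-1, +1}; each vote is shrunk by step_size."""
    n = len(X)
    w = [1.0 / n] * n                    # start with equal row weights
    ensemble = []
    for _ in range(n_learners):          # stop once n_learners models exist
        stump = fit_stump(X, y, w)
        err = min(max(stump[0], 1e-10), 1 - 1e-10)  # keep log() finite
        alpha = step_size * 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, stump))
        # raise the weight of misclassified rows, lower it for correct ones
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, row))
             for row, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def predict(ensemble, row):
    score = sum(alpha * stump_predict(stump, row) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

X = [[1], [2], [3], [4], [5], [6]]
y = [-1, -1, -1, 1, 1, 1]
model = adaboost(X, y, n_learners=5, step_size=0.3)
print([predict(model, row) for row in X])  # [-1, -1, -1, 1, 1, 1]
```

A smaller `step_size` shrinks every `alpha`, so each learner changes the row weights less and more learners are typically needed; this mirrors the smaller-versus-larger steps trade-off described above.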

Show Weak Learner

To display the weak learner models in the output, select Show Weak Learner Models.

Ensemble Methods Classification dialog, Boosting Parameters tab

Please see below for options unique to the Bagging - Parameters dialog.

Random Seed for Bootstrapping

If an integer value appears for Bootstrapping Random seed, Analytic Solver Data Science uses this value to set the bootstrapping random number seed. Setting the random number seed to a nonzero value (any number of your choice is OK) ensures that the same sequence of random numbers is used each time the dataset is chosen for the classifier. The default value is "12345". If left blank, the random number generator is initialized from the system clock, so the sequence of random numbers will differ in each calculation. If you need the results from successive runs of the algorithm to be strictly comparable, set the seed by typing the desired number into the box. This option accepts both positive and negative integers with up to 9 digits.
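The effect of a fixed seed can be seen in a small bootstrapping sketch: each bootstrap sample draws row indices with replacement, and the same seed reproduces the same samples. The helper `bootstrap_samples` is hypothetical, and Python's generator differs from Analytic Solver's, so the same seed value will not reproduce Analytic Solver's actual samples.

```python
import random

def bootstrap_samples(n_rows, n_samples, seed=12345):
    """Draw n_samples bootstrap samples of row indices, each of size n_rows.

    seed=None seeds from operating-system randomness (or the system time),
    so results differ between runs; a fixed integer makes them repeatable.
    """
    rng = random.Random(seed)
    return [[rng.randrange(n_rows) for _ in range(n_rows)]  # draw with replacement
            for _ in range(n_samples)]

first = bootstrap_samples(8, 3)
second = bootstrap_samples(8, 3)
print(first == second)  # True: same seed, same bootstrap samples
```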

Ensemble Methods Classification dialog, Bagging Parameters tab

Please see below for options that are unique to the Random Trees Parameters dialog. For remaining option explanations, please see above.

Number of Randomly Selected Features

The Random Trees ensemble method trains multiple "weak" classification trees using a fixed number of randomly selected features, then takes the mode of the trees' predictions (the class predicted most often) to create a "strong" classifier. The Number of randomly selected features option sets this fixed number of features. The default setting is 3.
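The voting idea can be sketched as follows. To keep the example short, each "tree" is a single split (a stump) fitted on a random subset of `n_features` columns, and each tree is trained on a bootstrap sample; both simplifications are assumptions, not a description of Analytic Solver's tree builder. Separate seeds drive bootstrapping and feature selection, mirroring the two seed options. All names here are hypothetical.

```python
import random
from collections import Counter

def fit_stump(X, y, features):
    """Fit a one-split tree on the given feature indices; each side predicts its majority class."""
    best = None
    for j in features:
        for thr in sorted({row[j] for row in X}):
            left = [yi for row, yi in zip(X, y) if row[j] <= thr]
            right = [yi for row, yi in zip(X, y) if row[j] > thr]
            if not right:  # splitting at the maximum leaves the right side empty
                continue
            left_cls = Counter(left).most_common(1)[0][0]
            right_cls = Counter(right).most_common(1)[0][0]
            errors = (sum(c != left_cls for c in left)
                      + sum(c != right_cls for c in right))
            if best is None or errors < best[0]:
                best = (errors, j, thr, left_cls, right_cls)
    if best is None:  # all candidate features are constant: predict the majority class
        majority = Counter(y).most_common(1)[0][0]
        best = (0, features[0], float("inf"), majority, majority)
    return best

def random_trees(X, y, n_trees=10, n_features=3, bootstrap_seed=12345, feature_seed=12345):
    boot_rng = random.Random(bootstrap_seed)
    feat_rng = random.Random(feature_seed)
    n_rows, n_total = len(X), len(X[0])
    k = min(n_features, n_total)
    trees = []
    for _ in range(n_trees):
        rows = [boot_rng.randrange(n_rows) for _ in range(n_rows)]  # bootstrap sample (assumed)
        feats = feat_rng.sample(range(n_total), k)  # fixed number of random features
        trees.append(fit_stump([X[i] for i in rows], [y[i] for i in rows], feats))
    return trees

def predict(trees, row):
    votes = [left if row[j] <= thr else right for _, j, thr, left, right in trees]
    return Counter(votes).most_common(1)[0][0]  # mode of the trees' votes

X = [[i, (i * 7) % 5, (i * 3) % 4, 1] for i in range(12)]
y = ["A" if i < 6 else "B" for i in range(12)]
trees = random_trees(X, y, n_trees=15, n_features=2)
print([predict(trees, row) for row in X])
```

Because each tree sees only a few features, individual trees are weak and differ from one another; the majority vote is what produces the stronger combined classifier.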

Random Seed for Feature Selection

If an integer value appears for Feature Selection Random seed, Analytic Solver Data Science uses this value to set the feature selection random number seed. Setting the random number seed to a nonzero value (any number of your choice is OK) ensures that the same sequence of random numbers is used each time the dataset is chosen for the classifier. The default value is "12345". If left blank, the random number generator is initialized from the system clock, so the sequence of random numbers will differ in each calculation. If you need the results from successive runs of the algorithm to be strictly comparable, set the seed by typing the desired number into the box. This option accepts both positive and negative integers with up to 9 digits.

Ensemble Methods Classification dialog, Random Trees Parameters tab

Please see below for options that are unique to the Ensemble Methods Scoring dialog.

Score Training Data

Select these options to show an assessment of the ensemble model's performance in predicting the value of the output variable in the training partition.

New in V2023: When Frequency Chart is selected, a frequency chart is displayed when the NNP_TrainingScore worksheet is selected. This chart displays an interactive application similar to the Analyze Data feature, explained in detail in the Analyze Data chapter earlier in this guide. The chart includes frequency distributions of the actual and predicted responses, shown individually or side-by-side depending on the user’s preference, as well as basic and advanced statistics for variables, percentiles, and six sigma indices.
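The core of a training-score assessment for a classifier is comparing actual and predicted classes. The following minimal sketch tabulates the per-class frequencies of actual and predicted responses (the data behind a side-by-side frequency chart) and the misclassification rate. The function `score_summary` is hypothetical and does not reproduce the layout of Analytic Solver's Detailed or Summary reports.

```python
from collections import Counter

def score_summary(actual, predicted):
    """Tabulate actual vs. predicted class frequencies and the misclassification rate."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    actual_freq = Counter(actual)
    predicted_freq = Counter(predicted)
    classes = sorted(set(actual) | set(predicted))
    table = {c: (actual_freq[c], predicted_freq[c]) for c in classes}
    errors = sum(a != p for a, p in zip(actual, predicted))
    return table, errors / len(actual)

actual = ["yes", "no", "no", "yes", "no"]
predicted = ["yes", "no", "yes", "yes", "no"]
table, error_rate = score_summary(actual, predicted)
print(table)       # {'no': (3, 2), 'yes': (2, 3)}
print(error_rate)  # 0.2
```

Matching class frequencies alone do not imply accurate predictions, which is why the error rate is reported alongside them.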

Score Validation Data

These options are enabled when a validation data set is present. Select these options to show an assessment of the ensemble model's performance in predicting the value of the output variable in the validation data. The report is displayed according to your specifications: Detailed, Summary, and Lift charts. When Frequency Chart is selected, a frequency chart (described above) is displayed when the NNP_ValidationScore worksheet is selected.

Score Test Data

These options are enabled when a test set is present. Select these options to show an assessment of the ensemble model's performance in predicting the value of the output variable in the test data. The report is displayed according to your specifications: Detailed, Summary, and Lift charts. When Frequency Chart is selected, a frequency chart (described above) is displayed when the NNP_TestScore worksheet is selected.

Score New Data

The options in this group apply the model to an altogether new dataset. See the "Scoring New Data" chapter for details on these options.

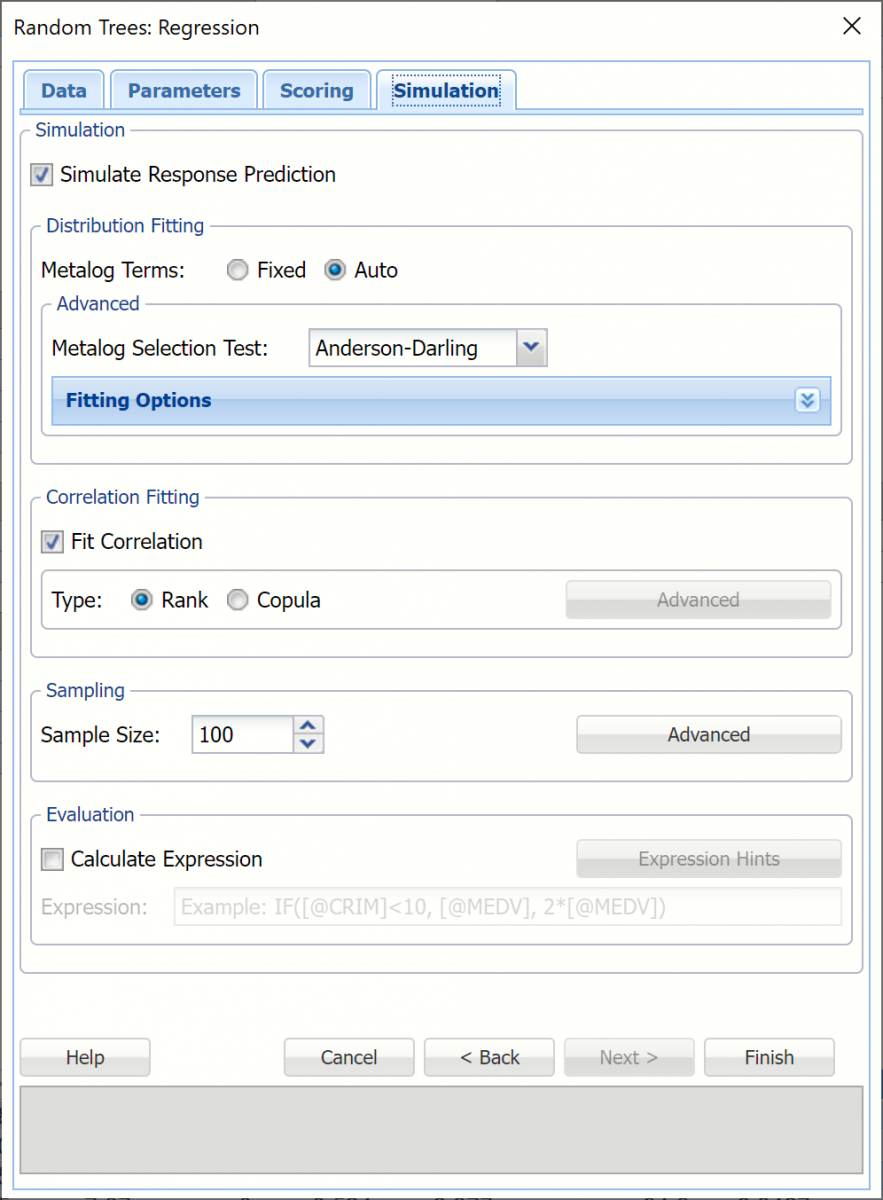

Ensemble Methods Classification dialog, Random Trees Parameters tab

All supervised algorithms in V2023 include a new Simulation tab. This tab uses the functionality of the Generate Data feature (described earlier in this guide) to generate synthetic data based on the training partition, then uses the fitted model to produce predictions for the synthetic data. The resulting report, NNP_Simulation, contains the synthetic data, the predicted values, and the Excel-calculated Expression column, if present. In addition, frequency charts containing the Predicted, Training, and Expression (if present) sources, or a combination of any pair, may be viewed if the charts are of the same type.

Evaluation: Select Calculate Expression to add an Expression column to the frequency chart displayed on the RT_Simulation output tab. Expression can be any valid Excel formula that references a variable and the response as [@COLUMN_NAME]. Click the Expression Hints button for more information on entering an expression.